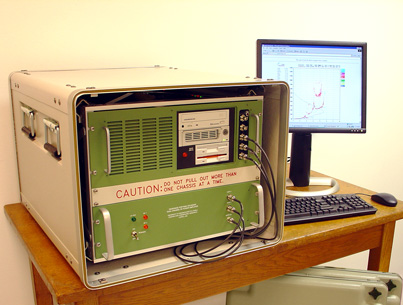

Figure 1-1A Digisonde™ Portable Sounder

Figure 1-1B Magnetic Loop Turnstile Antenna

Noteworthy new technology involved in this system includes:

- Electronically switched active crossed loop receiving antenna
- Commercially sourced 10 MIPS TMS 320C25 digital signal processor (DSP)
- 4 million sample DSP buffer memory
- 71 to 110 MHz digital synthesizer on a 4" x 5" card
- Compact DC-DC converters allowing operation on one battery
- Four-channel high speed (1 million 12-bit samples/sec) digitizer board
- A 160 Mbits/sec parallel data bus between the digitizer and the DSP
- A proprietary multi-tasking operating system for remote interaction via a modem connection without suspending system operation
- Direct digital synthesized coherent oscillators
- 21 dB signal processing gain from phase coded pulse compression
- 21 dB additional signal processing gain from coherent Doppler integration
- Automatic ionospheric layer identification and parameter scaling by an embedded expert system

The availability of a small low power ionosonde that could be operated on-site wherever a high frequency (HF) radio or radar was in use would greatly increase the value of the information produced by the instrument, since it would become available to the end user immediately.

One

of the chief applications for the real-time data currently

provided by digital ionospheric sounders is to manage the

operation of HF radio channels and networks. Since many

HF radios are operated at remote locations (i.e., aircraft,

boats, land vehicles of all sorts, and remote sites where

telephone service is unreliable) the major obstacle to making

practical use of the ionospheric sounder data and associated

computed propagation information is the dissemination of

this data to a data processing and analysis site. Since

HF is often used where no alternative communications link

exists, or is held in reserve in case primary communication

is lost, it is not practical to assume that a communications

link exists to make centrally tabulated real-time ionospheric

data available to the user. Furthermore, local measurements

are superior to measurements at sites of opportunity in

the user’s general region of the globe since extreme

variations in ionospheric properties are possible even over

short distances, especially at high latitudes [Buchau et

al., 1985; Buchau and Reinisch, 1991] or near the sunset

or sunrise terminator.

However,

for most applications, the size, weight, power consumption

and cost of a conventional ionospheric sounder have made

local measurements impractical. Therefore the availability

of a small, low cost sounder is a major improvement in the

usefulness of ionospheric sounder data. Shrinking the conventional

1 to 50 kW pulse sounders to a portable, battery operated

100 to 500 W system requires the application of substantial

signal processing gain to compensate for the 20 dB reduction

in transmitter power. Furthermore, a compact portable package

requires the use of highly integrated control, data acquisition,

timing, data processing, display and storage hardware.

The

objective of the DPS development project was to develop

a small vertical incidence (i.e., monostatic) ionospheric

sounder which could automatically collect and analyze ionospheric

measurements at remote operating sites for the purpose of

selecting optimum operating frequencies for obliquely propagated

communication or radar propagation paths. Intermediate objectives

assumed to be necessary to produce such a capability were

the development of optimally efficient waveforms and of

functionally dense signal generation, processing and ancillary

circuitry. Since the need for an embedded general purpose

computer was a given imperative, real-time control software

was developed to incorporate as many functions as was feasible

into this computer rather than having to provide additional

circuitry and components to perform these functions. The DPS duplicates all of the functions of its predecessor, the Digisonde™ 256 [Bibl et al., 1981; Reinisch, 1987], in a much smaller, low power package. These include the simultaneous measurement of seven observable parameters of reflected (or in oblique incidence, refracted) signals received from the ionosphere:

1) Frequency

2) Range (or height for vertical incidence measurements)

3) Amplitude

4) Phase

5) Doppler Shift and Spread

6) Angle of Arrival

7) Wave Polarization

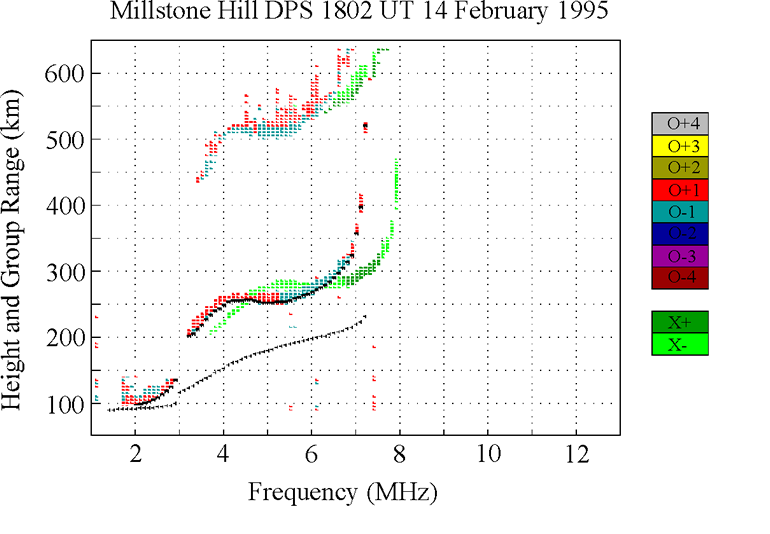

Because

the physical parameters of the ionospheric plasma affect

the way radio waves reflect from or pass through the ionosphere,

it is possible by measuring all of these observable parameters

at a number of discrete heights and discrete frequencies

to map out and characterize the structure of the plasma

in the ionosphere. Both the height and frequency dimensions

of this measurement require hundreds of individual measurements

to approximate the underlying continuous functions. The

resulting measurement is called an ionogram and comprises

a seven dimensional measurement of signal amplitude vs.

frequency and vs. height as shown in Figure 1-2 (due to

the limitations of current software only five may be displayed

at a time). Figure 1-2 is a five-dimensional display, with

sounding frequency as the abscissa, virtual reflection height

(simple conversion of time delay to range assuming propagation

at $3 \times 10^8$ m/sec) as the ordinate, signal amplitude

as the spot (or pixel) intensity, Doppler shift as the color

shade and wave polarization as the color group (the blue-green-grey

scale or "cool" colors showing extraordinary polarization,

the red-yellow-white scale or "hot" colors showing

ordinary polarization).

Figure 1-2 Five-Dimensional Ionogram

Another

objective of the DPS development was to store the data created

by the system in an easily accessible format (e.g., DOS

formatted personal computer files), while maintaining compatibility

with the existing base of Digisonde™ sounder

analysis software in use at the UMLCAR and at over 40 research

institutes around the world. This objective often competed

with the additional objective of providing an easily accessible

and simply understood standard data format to facilitate

the development of novel post-processing analysis and display

programs.

Ionospheric Propagation of Electromagnetic Waves

An

ionospheric sounder uses basic radar techniques to detect

the electron density (equal to the ion density since the

bulk plasma is neutral) of ionospheric plasma as a function

of height. The ionospheric plasma is created by energy from

the sun transferred by particles in the solar wind as well

as direct radiation (especially ultra-violet and x-rays).

Each component of the solar emissions tends to be deposited

at a particular altitude or range of altitudes and therefore

creates a horizontally stratified medium where each layer

has a peak density and to some degree, a definable width,

or profile. The shape of the ionized layer is often referred

to as a Chapman function [Davies, 1989] which is a roughly

parabolic shape somewhat elongated on the top side. The

peaks of these layers usually form between 70 and 300 km

altitude and are identified by the letters D, E, F1 and

F2, in order of their altitude.

By scanning the transmitted frequency from 1 MHz to as high as 40 MHz and measuring the time delay of any echoes (i.e., apparent or virtual height of the reflecting medium) a vertically transmitting sounder can provide a profile of electron density vs. height. This is possible because the relative refractive index of the ionospheric plasma is dependent on the density of the free electrons ($N_e$), as shown in Equation 1-1 (neglecting the geomagnetic field):

$$\mu^2(h) = 1 - k\,\frac{N_e}{f^2} \qquad (1\text{-}1)$$

where $k = 80.5$, $N_e$ is in electrons/m$^3$, and $f$ is in Hz [Davies, 1989; Chen, 1987].

The

behavior of the plasma changes significantly in the presence

of the Earth’s magnetic field. An exhaustive derivation

of $\mu$ [Davies, 1989] results in the Appleton Equation for

the refractive index, which is one of the fundamental equations

used in the field of ionospheric propagation. This equation

clearly shows that there are two values for refractive index,

resulting in the splitting of a linearly polarized wave

incident upon the ionosphere, into two components, known

as the ordinary and extraordinary waves. These propagate

with a different wave velocity and therefore appear as two

distinct echoes. They also exhibit two distinct polarizations,

approximately right hand circular and left hand circular,

which aid in distinguishing the two waves.

When the transmitted frequency is sufficient to drive the plasma at its resonant frequency there is a total internal reflection. The plasma resonance frequency ($f_p$) is defined by several constants, $e$ (the charge of an electron), $m$ (the mass of an electron) and $\varepsilon_0$ (the permittivity of free space), but only one variable, $N_e$ (the electron density in electrons/m$^3$) [Chen, 1987]:

$$f_p^2 = \frac{N_e e^2}{4\pi^2 \varepsilon_0 m} = k N_e \qquad (1\text{-}2)$$

A typical number for the F-region (200 to 400 km altitude) is $10^{12}$ electrons/m$^3$, so the plasma resonance frequency would be 9 MHz. The value of $\mu$ in Equation 1-1 approaches 0 as the operating frequency, $f$, approaches the plasma frequency. The group velocity of a propagating wave is proportional to $\mu$, so $\mu = 0$ implies that the wave slows down to zero, which is obviously required at some point in the process of reflection since the propagation velocity reverses.
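As a numerical check of Equations 1-1 and 1-2, the short sketch below evaluates the plasma resonance frequency and the squared refractive index for the F-region density quoted above. The function names and the 12 MHz probe frequency are illustrative assumptions, not part of the DPS software.

```python
import math

K = 80.5  # constant k from Equations 1-1 and 1-2 (Ne in electrons/m^3, f in Hz)


def plasma_frequency_hz(ne_per_m3: float) -> float:
    """Plasma resonance frequency from Equation 1-2: fp^2 = k * Ne."""
    return math.sqrt(K * ne_per_m3)


def refractive_index_squared(f_hz: float, ne_per_m3: float) -> float:
    """Equation 1-1 (geomagnetic field neglected): mu^2 = 1 - k * Ne / f^2."""
    return 1.0 - K * ne_per_m3 / f_hz ** 2


ne = 1e12                      # typical F-region density quoted in the text
fp = plasma_frequency_hz(ne)   # close to the 9 MHz quoted in the text
probe_hz = 12e6                # hypothetical sounding frequency above fp

print(f"fp = {fp / 1e6:.2f} MHz")
print(f"mu^2 at {probe_hz / 1e6:.0f} MHz = {refractive_index_squared(probe_hz, ne):.3f}")
print(f"|mu^2| at fp = {abs(refractive_index_squared(fp, ne)):.1e}")
```

A sounding frequency above the plasma frequency gives a squared index between 0 and 1 (the wave propagates), while at the plasma frequency the squared index falls to zero, which is the reflection condition described above.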

The

total internal reflection from the ionosphere is similar

to reflection of radio frequency (RF) energy from a metal

surface in that the re-radiation of the incident energy

is caused by the free electrons in the medium. In both cases

the wave penetrates to some depth. In a plasma the skin depth (the depth into the medium at which the electric field is 36.8% of its incident amplitude) is defined by:

$$\delta = \frac{\lambda_0 / 2\pi}{\sqrt{(f_p/f)^2 - 1}} \qquad (1\text{-}3)$$

where $\lambda_0$ is the free space wavelength.

The

major difference between ionospheric reflection and reflection

from a metallic surface is that the latter has a uniform

electron density while the ionospheric density increases

roughly parabolically with altitude, with densities starting

at essentially zero at stratospheric altitudes and rising

to a peak at about 200 to 400 km. In the case of a metal

there is no region where the wave propagates below the resonance

frequency, while in the ionosphere the refractive index

and therefore the wave velocity change with altitude until

the plasma resonance frequency is reached. Of course if

the RF frequency is above the maximum plasma resonance frequency

the wave is never reflected and can penetrate the ionosphere

and propagate into outer space. Otherwise what happens on

a microscopic scale at the surface of a metal and on a macroscopic

scale at the plasma resonance in the ionosphere is very

similar in that energy is re-radiated by electrons which

are responding to the incident electric field.

Coherent Integration


During

the 1960’s and 1970’s several variations in sounding

techniques started moving significantly beyond the basic

pulse techniques developed in the 1930’s. First was

the coherent integration of several pulses transmitted at

the same frequency. Two signals are coherent if, having

a phase and amplitude, they are able to be added together

(e.g., one radar pulse echo received from a target added

to the next pulse echo received from the same target, thousandths

of a second later) in such a way that the sum may be zero

(if the two signals are exactly out of phase with each other)

or double the amplitude (if they are exactly in phase).

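Coherent integration of N signals can provide a factor of N improvement in signal-to-noise power ratio, since the signal amplitudes add in phase while uncorrelated noise adds only in power. This technique was first used in the Digisonde™ 128 [Bibl and Reinisch, 1975].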
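The two limiting cases described above (in-phase echoes doubling, out-of-phase echoes cancelling) can be illustrated with complex phasors. This is a minimal sketch; the helper names are hypothetical, and the gain formula assumes noise that is uncorrelated from pulse to pulse.

```python
import cmath
import math


def coherent_sum(amplitude: float, phases_rad: list[float]) -> complex:
    """Add equal-amplitude echoes as complex phasors (amplitude and phase)."""
    return sum(amplitude * cmath.exp(1j * ph) for ph in phases_rad)


def snr_gain_db(n_pulses: int) -> float:
    """SNR gain of coherently integrating N pulses in uncorrelated noise."""
    return 10.0 * math.log10(n_pulses)


in_phase = coherent_sum(1.0, [0.0, 0.0])          # amplitudes add
out_of_phase = coherent_sum(1.0, [0.0, math.pi])  # amplitudes cancel

print(f"in phase:     |sum| = {abs(in_phase):.3f}")
print(f"out of phase: |sum| = {abs(out_of_phase):.3f}")
print(f"gain for 128 pulses: {snr_gain_db(128):.1f} dB")
```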

In ionospheric sounding, the motion of the ionosphere often makes it impossible to integrate by simple coherent summation for longer than a fraction of a second, although it is not rare to receive coherent echoes for tens of seconds. However, with the application of spectral integration (which is a byproduct of the Fourier transform used to create a Doppler spectrum) it is possible to coherently integrate pulse echoes for tens of seconds under nearly all ionospheric conditions [Bibl and Reinisch, 1978]. The integration may progress for as long a time as the rate of change of phase remains constant (i.e., there is a constant Doppler shift, $\Delta f$). The Digisonde™ 128PS and all subsequent versions perform this spectral integration.
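The following sketch illustrates why spectral integration succeeds where simple summation fails. It assumes a hypothetical echo of unit amplitude whose phase advances at a constant rate from pulse to pulse (a constant Doppler shift), and uses a plain discrete Fourier transform written out in pure Python; the pulse count and Doppler bin are arbitrary choices.

```python
import cmath
import math


def dft(samples: list[complex]) -> list[complex]:
    """Direct discrete Fourier transform (O(N^2), adequate for a small demo)."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        for k in range(n)
    ]


N = 32            # number of pulses integrated (hypothetical)
doppler_bin = 3   # the echo phase completes 3 full cycles over the N pulses

# One complex echo sample per pulse, with linearly advancing phase.
echoes = [cmath.exp(2j * math.pi * doppler_bin * i / N) for i in range(N)]

simple_sum = abs(sum(echoes))               # plain summation: the echoes cancel
spectrum = [abs(v) for v in dft(echoes)]    # Doppler spectrum magnitudes
peak_bin = max(range(N), key=lambda k: spectrum[k])

print(f"simple summation: {simple_sum:.3f}")
print(f"spectral peak:    {spectrum[peak_bin]:.3f} in bin {peak_bin}")
```

The Fourier transform removes the linear phase progression for the matching Doppler bin, so all N echoes add in phase there even though their plain sum is essentially zero.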

Additional

detail on this topic is contained in Chapter 2 in this section.

Coded Pulses to Facilitate Pulse Compression Radar Techniques

A

third general technique to improve on the simple pulse sounder

is to stretch out the pulse by a factor of N, thus increasing

the duty cycle so the pulse contains more energy without

requiring a higher power transmitter (power x time = energy).

However, to maintain the higher range resolution of the

simple short pulse the pulse can be bi-phase, or phase reversal

modulated with a phase code to enable the receiver to create

a synthetic pulse with the original (i.e., that of the short

pulse) range resolution. A network of sounders using a 13-bit Barker Code was operated by the U.S. Navy in the 1960’s.

The

critical factor in the use of pulse compression waveforms

for any radar type measurement is the correlation properties

of the internal phase code. Phase codes proposed and experimented

with included the Barker Code [Barker, 1953], Huffman Sequences

[Huffman 1962], Convoluted Codes [Coll, 1961], Maximal Length

Sequence Shift Register Codes (M-codes) [Sarwate and Pursley,

1980], or Golay’s Complementary Sequences [Golay, 1961],

which have been implemented in the VHF mesospheric sounding

radar at Ohio State University [Schmidt et al., 1979] and

in the DPS. The internal phase code alternative has just

recently become economically feasible with the availability

of very fast microprocessor and signal processor IC’s.

Barker Coded pulses have been implemented in several ionospheric

sounders to date, but until the DPS was developed there

have been no other successful implementations of Complementary

Series phase codes in ionospheric sounders.

The

European Incoherent Scatter radar in Tromso, Norway (VanEiken,

1991 and 1993) and an over-the-horizon (OTH) HF radar used

the Complementary Series codes. However most major radar

systems including all currently active OTH radars opted

for the FM/CW chirp technique, due to its resistance to

Doppler induced leakage and its compatibility with analog

pulse compression processing techniques. Basically, the

chirp waveform avoids the need for extremely fast digital

processing capabilities, since only the final stage is performed

digitally, while the pulse compression is best performed

entirely digitally. Even at the modest bandwidths used for

ionospheric sounding, this digital capability was until

recently, much more expensive and cumbersome than the special

synthesizers required for chirpsounding.

Another

new development in the 1970’s was the coherent multiple

receiver array [Bibl and Reinisch, 1978] which allows angle

of arrival (incidence angle) to be deduced from phase differences

between antennas by standard interferometer techniques.

Given a known operating frequency, and known antenna spacing,

by measuring the phase or phase difference on a number of

antennas, the angle of arrival of a plane wave can be deduced.

This interferometry solution is invalid, however, if there

are multiple sources contributing to the received signal

(i.e., the received wave therefore does not have a planar

phase front). This problem can be overcome in over 90% of

the cases as was first shown with the Digisonde™

256 [Reinisch et al., 1987] by first isolating or discriminating

the multiple sources in range, then in the Doppler domain

(i.e., isolating a plane wavefront) before applying the

interferometry relationships.
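The standard two-antenna interferometer relation referred to above can be sketched as follows. The relation used is the textbook one for a single plane wave, phase difference = 2π·d·sin(θ)/λ, with θ measured from the normal to the antenna baseline; the 5 MHz frequency, 20 m spacing and function names are hypothetical values chosen only for illustration, not DPS array parameters.

```python
import math

C = 3.0e8  # propagation speed in m/s, as assumed elsewhere in this chapter


def phase_difference_rad(freq_hz: float, spacing_m: float, angle_rad: float) -> float:
    """Phase difference between two antennas for a single plane wave."""
    wavelength = C / freq_hz
    return 2.0 * math.pi * spacing_m * math.sin(angle_rad) / wavelength


def angle_of_arrival_rad(freq_hz: float, spacing_m: float, dphi_rad: float) -> float:
    """Invert the relation above; unambiguous only while spacing < wavelength / 2."""
    wavelength = C / freq_hz
    return math.asin(dphi_rad * wavelength / (2.0 * math.pi * spacing_m))


freq_hz = 5.0e6     # hypothetical operating frequency (wavelength 60 m)
spacing_m = 20.0    # hypothetical antenna spacing
true_angle = math.radians(10.0)

dphi = phase_difference_rad(freq_hz, spacing_m, true_angle)
recovered = angle_of_arrival_rad(freq_hz, spacing_m, dphi)
print(f"phase difference = {math.degrees(dphi):.2f} deg")
print(f"recovered angle  = {math.degrees(recovered):.2f} deg")
```

The inversion is only meaningful for one plane wave at a time, which is why the sources are first separated in range and Doppler as described above.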

Except

for the FM/CW chirpsounder which operates well on transmitter

power levels of 10 to 100 W (peak power) the above techniques

and cited references typically employ a 2 to 30 kW peak

power pulse transmitter. This power is needed to get sufficient

signal strength to overcome an atmospheric noise environment

which is typically 20 to 50 dB (CCIR Noise Tables) above

thermal noise (defined as kTB, the theoretical minimum noise due to thermal motion, where k = Boltzmann’s constant, T = temperature in K, and B = system bandwidth in Hz). More importantly, however,

since ionogram measurements require scanning of the entire

propagating band of frequencies in the 0.5 to 20 MHz RF

band (up to 45 MHz for oblique measurements), the sounder

receiver will encounter broadcast stations, ground-to-air

communications channels, HF radars, ship-to-shore radio

channels and several very active radio amateur bands which

can add as much as 60 dB more background interference. Therefore,

the sounder signal must be strong enough to be detectable

in the presence of these large interfering signals.
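To put numbers on the kTB reference level, the sketch below computes the thermal noise floor and then adds the 20 to 50 dB of atmospheric noise quoted above. The 290 K reference temperature and the 15 kHz receiver bandwidth are assumptions made for illustration only.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K


def thermal_noise_dbm(bandwidth_hz: float, temperature_k: float = 290.0) -> float:
    """Thermal noise power kTB expressed in dBm (290 K is an assumed reference)."""
    power_w = BOLTZMANN * temperature_k * bandwidth_hz
    return 10.0 * math.log10(power_w / 1e-3)


bandwidth_hz = 15e3  # hypothetical receiver bandwidth
floor_dbm = thermal_noise_dbm(bandwidth_hz)
print(f"kTB in {bandwidth_hz / 1e3:.0f} kHz: {floor_dbm:.1f} dBm")

# Atmospheric noise is quoted in the text as 20 to 50 dB above thermal noise.
for excess_db in (20.0, 50.0):
    print(f"  atmospheric noise at +{excess_db:.0f} dB: {floor_dbm + excess_db:.1f} dBm")
```

Because kTB scales linearly with B, doubling the receiver bandwidth raises the noise floor by about 3 dB.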

To

make matters worse, a pulse sounder signal must have a broad

bandwidth to provide the capability to accurately measure

the reflection height, therefore the receiver must have

a wide bandwidth, which means more unwanted noise is received

along with the signal. The noise is distributed quite evenly

over bandwidth (i.e., white), while interfering signals

occur almost randomly (except for predictably larger probabilities

in the broadcast bands and amateur radio bands) over the

bandwidth. Thus a wider-bandwidth receiver receives proportionally

more uniformly distributed noise and the probability of

receiving a strong interfering signal also goes up proportionally

with increased bandwidth.

The

DPS transmits only 300 W of pulsed RF power but compensates

for this low power by digital pulse compression and coherent

spectral (Doppler) integration. The two techniques together

provide about 30 dB of signal processing gain (up to 42

dB for the bi-static oblique waveforms) thus for vertical

incidence measurements the system performs equivalently

with a simple pulse sounder of 1000 times greater power

(i.e., 300 kW).
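The equivalence between processing gain and transmitter power is a plain decibel conversion, shown here as a small sketch (the function names are illustrative):

```python
def db_to_power_ratio(gain_db: float) -> float:
    """Convert a gain in decibels to a linear power ratio."""
    return 10.0 ** (gain_db / 10.0)


def equivalent_power_w(transmit_power_w: float, processing_gain_db: float) -> float:
    """Power a simple pulse sounder would need to match the processed result."""
    return transmit_power_w * db_to_power_ratio(processing_gain_db)


dps_power_w = 300.0   # DPS pulsed RF power quoted in the text
gain_db = 30.0        # combined processing gain quoted for vertical incidence

equivalent_w = equivalent_power_w(dps_power_w, gain_db)
print(f"{gain_db:.0f} dB = factor of {db_to_power_ratio(gain_db):.0f}")
print(f"equivalent simple-pulse power: {equivalent_w / 1e3:.0f} kW")
```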

Additional

detail on this topic is contained in Chapter 2 in this section.

Current Applications of Ionospheric Sounding

Current applications of ionospheric sounders fall into two categories:

a. Support of operational systems, including shortwave radio communications and OTH radar systems. This support can be in the form of predictions of propagating frequencies at given times and locations in the future (e.g., over the ensuing month) or the provision of real-time updates (updated as frequently as every 15 minutes) to detect current conditions such that system operating parameters can be optimized.

b. Scientific research to enable better prediction of ionospheric conditions and to understand the plasma physics of the solar-terrestrial interaction of the Earth’s atmosphere and magnetic field with the solar wind.

There

has been considerable effort in producing global models

of ionospheric densities, temperature, chemical constitution,

etc, such that a few sounder measurements could calibrate

the models and improve the reliability of global predictions.

It has been shown that if measurements are made within a

few hundred kilometers of each other, the correlation of

the measured parameters is very high [Rush, 1978]. Therefore

a network of sounders spaced by less than 500 km can provide

reliable estimates of the ionosphere over a 250 km radius

around them.

The

areas of research pursued by users of the more sophisticated

features of the Digisonde™ sounders include

polar cap plasma drift, auroral phenomena, equatorial spread-F

and plasma irregularity phenomena, and sporadic E-layer

composition [Buchau et al., 1985; Reinisch 1987; and Buchau

and Reinisch 1991]. There may be some driving technological

needs (e.g., commercial or military uses) in some of these

efforts, but many are simply basic research efforts aimed

at better understanding the manifestations of plasma physics

provided by nature.

Requirements for a Small Flexible Sounding System

The detailed design and synthesis of an RF measurement system (or any electronic system) must be based on several criteria:

a. The performance requirements necessary to provide the needed functions, in this case scientific measurements of electron densities and motions in the ionosphere.

b. The availability of technology to implement such a capability.

c. The cost of purchasing or developing such technology.

d. The risk involved in depending on certain technologies, especially if some of the technology needs to be developed.

e. The capabilities of the intended user of the system, and its expected willingness to learn to use and maintain it; i.e., how complicated can the operation be before the user will give up and not try to learn it.

The question of what technology can be brought to bear on the realization of a new ionospheric sounder was answered in a survey of existing technology in 1989, when the portable sounder development started in earnest. This survey showed the following available components, which showed promise in creating a smaller, less costly, more powerful instrument. Many of these components were not available when the last generation of Digisondes™ (circa 1980) was being developed:

- Solid-state 300 W MOSFET RF power transistors
- High-speed high precision (12, 14 and 16 bit) analog to digital (A–D) converters
- High-speed high precision (12 and 16 bit) digital to analog (D–A) converters
- Single chip Direct Digital Synthesizers (DDS)
- Wideband (up to 200 MHz) solid state op amps for linear feedback amplifiers
- Wideband (4 octaves, 2–32 MHz) 90° phase shifters
- Proven Digisonde™ 256 measurement techniques
- Very fast programmable DSP (RISC) IC’s
- Fast, single board, microcomputer systems and supporting programming languages

Many

of these components are inexpensive and well developed because

they feed a mass market industry. The MOSFET transistors are

used in Nuclear Magnetic Resonance medical imaging systems to

provide the RF power to excite the resonances. The high speed

D–A converters are used in high resolution graphic video

display systems such as those used for high performance workstations.

The DDS chips are used in cellular telephone technology, in

which the chip manufacturer, Qualcomm, is an industry leader.

The DSP chips are widely used in speech processing, voice recognition,

image processing (including medical instrumentation). And of

course, fast microcomputer boards are used by many small systems

integrators which end up in a huge array of end user applications

ranging from cash registers to scientific computing to industrial

process controllers.

The

performance parameters were well known at the beginning of the

DPS development, since several models of ionospheric pulse sounders

had preceded it. The frequency range of 1 to 20 MHz for vertical

sounding was an accepted standard, and 2 to 30 MHz was accepted

as a reasonable range for oblique incidence measurements. It

was well known that radio waves of greater than 30 MHz often

do propagate via skywave paths, however, most systems relying

on skywave propagation don’t support these frequencies,

so interest in this frequency band would only be limited to

scientific investigations. A required power level in the 5 to

10 kW range for pulse transmitters had provided good results

in the past. The measurement objectives were to simultaneously

measure all seven observable parameters outlined at Paragraph

107 above in order to characterize the following physical features:

- The height profile of electron density vs. altitude
- Position and spatial extent of irregularity structures, gradients and waves
- Motion vectors of structures and waves

As

mentioned in the section above dealing with Current Applications

of Ionospheric Sounding (Paragraph 127 et seq. above),

the accurate measurement of all of the parameters, except frequency

(it being precisely set by the system and need not be measured)

depends heavily on the signal to noise ratio of the received

signal. Therefore vertical incidence ionospheric sounders capable

of acquiring high quality scientific data have historically

utilized powerful pulse transmitters in the 2 to 30 kW range.

The necessity for an extremely good signal to noise ratio is

demanded by the sensitivity of the phase measurements to the

random noise component added to the signal level. For instance,

to measure phase to 1 degree accuracy requires a signal to noise

ratio better than 40 dB (assuming a Gaussian noise distribution

which is actually a best case), and measurement of amplitude

to 10% accuracy requires over 20 dB signal to noise ratio. Of

course, it is desirable that these measurements be immune to

degradation from noise and interference and maintain their high

quality over a large frequency band. This requires that at the

lower end of the HF band the system’s design has to overcome

absorption, noise and interference, and poor antenna performance

and still provide at least a 20 to 40 dB signal to noise ratio.

METHODOLOGY, THEORETICAL BASIS AND IMPLEMENTATION

General

The

VIS/DPS borrows several of the well proven measurement techniques

used by the Digisonde™ 256 sounder described in

[Bibl, et al, 1981; Reinisch et al., 1989] and [Reinisch, 1987],

which has been produced for the past 12 years by the UMLCAR.

The addition of digital pulse compression in the DPS makes the

use of low power feasible, the implementation in software of

processes that were previously implemented in hardware results

in a much smaller physical package, and the high level language

control software and standard PC-DOS (i.e., IBM/PC) data file

formats provide a new level of flexibility in system operation

and data processing.

A technical description of the DPS (sounder unit and receive antenna sub-systems) is contained in Section 2 of this manual.

Coherent Phase Modulation and Pulse Compression

The DPS is able to be miniaturized by lengthening the transmitted pulse beyond the pulse width required to achieve the desired range resolution, where the radar range resolution is defined as

$$\Delta R = \frac{c}{2b} \qquad (1\text{-}4)$$

where $b$ is the system bandwidth, or

$$\Delta R = \frac{cT}{2}$$

for a simple rectangular pulse waveform, with $T$ being the width of the rectangular pulse.
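Both forms of Equation 1-4 can be evaluated directly. The sketch below uses the 66.67 µsec chip width quoted later in this chapter as the pulse width; setting the bandwidth to 1/T is what makes the two forms of the equation agree and is the assumption made here. The function names are illustrative.

```python
C = 3.0e8  # propagation speed in m/s


def range_resolution_from_bandwidth(bandwidth_hz: float) -> float:
    """Equation 1-4, first form: delta_R = c / (2 b)."""
    return C / (2.0 * bandwidth_hz)


def range_resolution_from_pulse_width(pulse_width_s: float) -> float:
    """Equation 1-4, second form: delta_R = c T / 2 for a rectangular pulse."""
    return C * pulse_width_s / 2.0


chip_width_s = 66.67e-6           # chip width quoted later in this chapter
bandwidth_hz = 1.0 / chip_width_s  # assumed b = 1/T

res_from_width = range_resolution_from_pulse_width(chip_width_s)
res_from_bw = range_resolution_from_bandwidth(bandwidth_hz)
print(f"from pulse width: {res_from_width / 1e3:.2f} km")
print(f"from bandwidth:   {res_from_bw / 1e3:.2f} km")
```

Both forms give a range resolution of about 10 km for this chip width.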

The

longer pulse allows a small low voltage solid state amplifier

to transmit an amount of energy equal to that transmitted by

a high power pulse transmitter (energy = power × time, and power = $V^2/R$) without having to provide components to handle the high voltages required for tens of kilowatt power levels. The time resolution of the short pulse is provided by intrapulse phase modulation using programmable phase codes (user selectable and firmware expandable); the Complementary Codes and M-codes are standard. The use of a Complementary Code pulse compression

technique is described in this chapter, which shows that at

300 W of transmitter power the expected measurement quality

is the same as that of a conventional sounder of about 500 kW

peak pulse power.

The transmitted spread spectrum signal $s(t)$ is a bi-phase (180° phase reversal) modulated pulse. As illustrated in Figure 1-3, bi-phase modulation is a linear multiplication of the binary spreading code $p(t)$ (a.k.a. a chipping sequence, where each code bit is a "chip") with a carrier signal $\sin(2\pi f_0 t)$ or, in complex form, $\exp[j 2\pi f_0 t]$, to create a transmitted signal,

$$s(t) = p(t)\,\exp[j 2\pi f_0 t] \qquad (1\text{-}5)$$

Figure 1-3 Generation of a Bi-phase Modulated Spread Spectrum Waveform

NOTE

Notation throughout this chapter

will use s(t) as the transmitted signal, r(t) the received

signal and p(t) as the chip sequence. Functions $r_1(t)$ and $r_2(t)$ will be developed to describe

the signal after various stages of processing in the receiver.

The

term chip is used rather than bit because for

spread spectrum communications many chips are required to transmit

one bit of message information, so a distinct term had to be

developed. Figure 1-4 on the following page depicts the modulation

of a sinusoidal RF carrier signal by a binary code (notice that

the code is a zero mean signal, i.e., centred around 0 volts

amplitude). Since the mixer in Figure 1-3 can be thought of

as a mathematical multiplier, the code creates a 180° ($\pi$ radians) phase shift in the sinusoidal carrier whenever p(t) is negative, since $-\sin(\omega t) = \sin(\omega t + \pi)$.
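The multiplier view of bi-phase modulation can be checked numerically: multiplying the carrier by a chip value of -1 gives the same sample as shifting the carrier phase by π. The carrier frequency, sample time and function name below are arbitrary, hypothetical choices.

```python
import math


def biphase_sample(chip: int, f0_hz: float, t_s: float) -> float:
    """One sample of p(t) * sin(2*pi*f0*t), where the chip value is +1 or -1."""
    return chip * math.sin(2.0 * math.pi * f0_hz * t_s)


f0_hz = 5.0e6   # hypothetical carrier frequency
t_s = 1.23e-7   # arbitrary sample instant

unmodulated = biphase_sample(+1, f0_hz, t_s)
flipped = biphase_sample(-1, f0_hz, t_s)
shifted = math.sin(2.0 * math.pi * f0_hz * t_s + math.pi)

print(f"chip = +1: {unmodulated:+.6f}")
print(f"chip = -1: {flipped:+.6f}")
print(f"carrier shifted by pi: {shifted:+.6f}")
```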

The

binary spreading code is identical to a stream of data bits

except that it is designed such that it forms a pattern with

uniquely desirable autocorrelation function characteristics

as described later in this chapter. The 16-bit Complementary

Code pair used in the DPS is 1-1-0-1-1-1-1-0-1-0-0-0-1-0-1-1

modulated onto the odd-numbered pulses and 1-1-0-1-1-1-1-0-0-1-1-1-0-1-0-0

modulated onto the even-numbered pulses. This pattern of phase

modulation chips is such that the frequency spectrum of such

a signal (as shown in Figure 1-4) is uniformly spread over the

signal bandwidth, thus the term "spread spectrum".

In fact, it is interesting to note that the frequency spectrum

content of the spread spectrum signal used by the DPS is identical

to that of the higher peak power, simple short pulse used by

the Digisonde™ 256, even though the physical pulse is 8 times longer. Since they have the same bandwidth, Equation 1-4 would suggest that they have the same range resolution. It will be shown later in this chapter that the ability of the Digisonde™ 256 and the DPS to determine range

(i.e., time delay), phase, Doppler shift and angle of arrival

is also identical between the two systems, even though the transmitted

waveforms appear to be vastly different.

Figure 1-4 Spectral Content of a Spread-Spectrum Waveform

Since the transmitted signal would obscure the detection of the much weaker echo in a monostatic system, the transmitted pulse must be turned off before the first E-region echoes arrive at the receiver which, as shown in Figure 1-5, is about $T_E$ = 600 µsec after the beginning of the pulse. Also, since the receiver is saturated when the transmitter pulse comes on again, the pulse repetition frequency is limited by the longest time delay (listening interval) of interest, which is at least 5 msec, corresponding to reflections from 750 km altitude. To meet these constraints, a 533 µsec pulse made up of eight 66.67 µsec phase code chips (15 000 chips/sec) is selected, which allows detection of ionospheric echoes starting at 80 km altitude. To avoid excessive range ambiguity, a highest pulse repetition frequency of 200 pps is chosen, which allows reception of the entire pulse from a virtual height of 670 km altitude (the pulse itself is 80 km long) before the next pulse is transmitted. This timing captures all but the highest multihop F-region echoes, which are of little interest. Under conditions where higher unambiguous ranges, and therefore longer receiver listening intervals, are desired, 100 pps or 50 pps can be selected under software control.
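The timing figures in this paragraph all follow from converting two-way time delay to virtual height at $3 \times 10^8$ m/sec. The sketch below reproduces them; the variable and function names are illustrative only.

```python
C = 3.0e8  # propagation speed in m/s


def virtual_height_km(delay_s: float) -> float:
    """Two-way time delay converted to virtual height: h = c * t / 2."""
    return C * delay_s / 2.0 / 1e3


chip_s = 66.67e-6   # chip width quoted in the text
n_chips = 8         # chips per pulse quoted in the text
prf_pps = 200.0     # highest pulse repetition frequency quoted in the text

pulse_s = n_chips * chip_s                          # about 533 microseconds
min_height_km = virtual_height_km(pulse_s)          # first height clear of the pulse
max_height_km = virtual_height_km(1.0 / prf_pps)    # height for a 5 msec delay
full_pulse_height_km = max_height_km - min_height_km  # whole echo fits before next pulse

print(f"pulse length:          {pulse_s * 1e6:.0f} microseconds")
print(f"lowest usable height:  {min_height_km:.0f} km")
print(f"height at 5 msec:      {max_height_km:.0f} km")
print(f"full-pulse reception:  {full_pulse_height_km:.0f} km")
```

Lowering the repetition frequency to 100 or 50 pps lengthens the listening interval and raises the last two figures accordingly.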

Figure 1-5 Natural Timing Limitations for Monostatic Vertical Incidence Sounding

The

key to the pulse compression technique lies in the selection

of a spreading function, p(t), which possesses an autocorrelation

function appropriate for the application. The ideal autocorrelation

function for any remote sensing application is a Dirac delta function (or instantaneous impulse, $\delta(t)$), since this would provide perfect range accuracy and infinite

resolution. However, since the Dirac delta function has infinite

instantaneous power and infinite bandwidth, the engineering

tradeoffs in the design of any remote sensing system mainly

involve how far one can afford to deviate from this ideal (or

how much one can afford to spend in more closely approximating

this ideal) and still achieve the accuracy and resolution required.

More to the point, for a discussion of a discrete time digital

system such as the DPS, the ideal signal is a complex unit impulse

function, with the phase of the impulse conveying the RF phase

of the received signal. The many different pulse compression

codes all represent some compromise in achieving this ideal,

although each code has its own advantages, limitations, and

trade-offs. The autocorrelation function as applied to code

compression in the VIS/DPS is defined as:

$$R(k) = \sum_{n} p(n)\, p(n+k) \tag{1-6}$$

Therefore the ideal as described above is $R(k) = \delta(k)$.

(Several examples of autocorrelation functions of the codes

described in this Section can be seen in Figures 1-9 through

1-13.)
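Equation 1-6 can be evaluated directly for any ±1 code. The sketch below does so for a 13-chip Barker code; that particular code is chosen here only for illustration (the text does not say which Barker length was simulated), and the function name is hypothetical.

```python
def autocorrelation(code):
    """Aperiodic R(k) = sum over n of p(n) * p(n + k), for k = 0 .. len(code) - 1."""
    m = len(code)
    return [sum(code[n] * code[n + k] for n in range(m - k)) for k in range(m)]


# 13-chip Barker code (illustrative choice).
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]

R = autocorrelation(BARKER_13)
print(R)
```

The zero-lag value equals the code length, while the values at other lags show how far this code departs from the ideal $\delta(k)$.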

For

ionospheric applications, the received spread-spectrum coded

signal, r(t), may be a superposition of several multipath echoes

(i.e., echoes which have traveled over various propagation paths

between the transmitter and receiver) reflected at various ranges

from various irregular features in the ionosphere. The algorithm

used to perform the code compression operates on this received

multipath signal, r(t), which is an attenuated and time delayed

(possibly multiple time delays) replica of the transmitted signal

s(t) (from Equation 1–5), which can be represented as:

$$r(t) = \sum_{i=1}^{P} a_i\, s(t - \tau_i) \tag{1-7}$$

or

$$r(t) = \sum_{i=1}^{P} a_i\, p(t - \tau_i) \exp[j2\pi f_0 t - j\phi_i]$$

where $\Sigma$ shows that the P multipath signals sum linearly at the receive antenna, $a_i$ is the amplitude of the ith multipath component of the signal, and $\tau_i$ is the propagation delay associated with multipath i. The carrier phase $\phi_i$ of each multipath could be expressed in terms of the carrier frequency and the time delay $\tau_i$; however, since the multiple carriers (from the various multipath components) cannot be resolved, while the delays in the complex code modulation envelope can be, a separate term, $\phi_i$, is used. Next, when the carrier is stripped off of the signal, this RF phase term will be represented by a complex amplitude coefficient $\alpha_i$ rather than $a_i$.

Figure 1-6 Conversion to Baseband by Undersampling

By

down-converting to a baseband signal (a digital technique is

shown in Figure 1-6), the carrier signal can be stripped away,

leaving only the superposed code envelopes delayed by P multiple

propagation paths. Figure 1-6 presents one way to strip the

carrier off a phase modulated signal. This is the screen display

on a digital storage oscilloscope looking at the RF output from

the DPS system operating at 3.5 MHz. Notice that the horizontal scan spans 2 msec, which, if the oscilloscope were capable of presenting more than 14 000 resolvable points, would display 7 000 cycles of RF. The sample clock in the digital storage scope is not synchronized to the DPS; however, the digital sampling remains coherent with the RF for periods of several milliseconds.

The analog signal is digitized at a rate such that each sample

is made an integer number of cycles apart (i.e., at the same

phase point) and therefore looks like a DC level until the phase

modulation creates a sudden shift in the sampled phase point.

Therefore the 180° phase reversals made on the RF carrier show

up as DC level shifts, replicating the original modulating code

exactly. The more hardware intensive method of quadrature demodulation

with hardware components (mixers, power splitters and phase

shifters) can be found in any communications systems textbook,

such as [Peebles, 1979]. After removing the carrier, the modified

r(t), now represented by $r_1(t)$, becomes:

$$r_1(t) = \sum_{i=1}^{P} \alpha_i\, p(t - \tau_i) \tag{1-8}$$

where the carrier phase of each of the multipath components is now represented by a complex amplitude $\alpha_i$ which carries along the RF phase term, originally defined by $\phi_i$ in Equation 1–7, for each multipath. Since

the pulse compression is a linear process and contributes no

phase shift, the real and imaginary (i.e., in-phase and quadrature)

components of this signal can be pulse compressed independently

by cross-correlating them with the known spreading code p(t).

The complex components can be processed separately because the

pulse compression (Equation 1–9B) is linear and the code

function, p(n), is all real. Therefore the phase of the cross-correlation

function will be the same as the phase of r1(t).

The

classical derivation of matched filter theory [e.g., Thomas,

1964] creates a matched filter by first reversing the time

axis of the function p(t) to create a matched filter impulse

response h(t) = p(–t). Implementing the pulse compression

as a linear system block (i.e., a "black box" with

impulse response h(t)) will again reverse the time axis of

the impulse response function by convolving h(t) with the

input signal. If neither reversal is performed (they effectively

cancel each other) the process may be considered to be a cross-correlation

of the received signal, r(t) with the known code function, p(t).

Either way, the received signal, r2(n) after matched

filter processing becomes:

$$r_2(n) = r_1(n) * h(n) = r_1(n) * p(-n) \tag{1-9A}$$

or by substituting Equation 1–8 and writing out the discrete convolution, we obtain the cross-correlation approach,

$$r_2(n) = \sum_{i=1}^{P} \alpha_i \sum_{k=1}^{M} p(k - \tau_i)\, p(k - n) = \sum_{i=1}^{P} M \alpha_i\, \delta(n - \tau_i) \tag{1-9B}$$

where n is the time domain index (as in the sample number, n, which occurs at time t = nT where T is the sampling interval), P is the number of multipaths, k is the auxiliary index used to perform the convolution, and M is the number of phase code chips. The last expression in Equation 1–9B, the $\delta(n)$, is only true if the autocorrelation function of the selected code, p(t), is an ideal unit impulse or "thumbtack" function (i.e., it has a value of M at correlation lag zero, while it has a value of zero for all other correlation lags). So, if the selected code has this property, then the function $r_2(n)$ in Equation 1–9 is the impulse response of the propagation path, which has a value $\alpha_i$ (the complex amplitude of multipath signal i) at each time $n = \tau_i$ (the propagation delay attributable to multipath i).

Figure 1-7 Illustration of Complementary Code Pulse Compression

Figure 1-7 illustrates the unique implementation of Equation 1–9 employed for compression of Complementary Sequence waveforms. A 4-chip code is used in this figure for ease of illustration but arbitrarily long sequences can be synthesized (the DPS’s Complementary Code is 8 chips long). It is necessary to transmit two encoded pulses sequentially, since the Complementary Codes exist in pairs, and only the pairs together have the desired autocorrelation properties. Equation 1–8 (the received signal without its sinusoidal carrier) is represented by the input signal shown in the upper left of Figure 1-7. The time delay shifts (indexed by n in Equation 1–9) are illustrated by shifting the input signal by one sample period at a time into the matched filter. The convolution shifts (indexed by k in Equation 1–9) sequence through a multiply-and-accumulate operation with the four ±1 tap coefficients. The accumulated value becomes the output function $r_2(n)$ for the current value of n. The two resulting expressions for Equation 1–9 (an $r_2(n)$ expression for each of the two Complementary Codes) are shown on the right with the amplitude M = 4 clearly expressed. The non-ideal approximation of a delta function, $\delta(n - \tau_i)$, is apparent from the spurious $\alpha$ and $-\alpha$ amplitudes. However, by summing the two $r_2(n)$ expressions resulting from the two Complementary Codes, the spurious terms are cancelled, leaving a perfect delta function of amplitude 2M.
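The cancellation described above can be reproduced numerically. The sketch below uses one known 4-chip complementary pair; whether it is the pair drawn in Figure 1-7 is an assumption, and the helper names, delay and complex amplitude are hypothetical values chosen for illustration.

```python
# One known 4-chip complementary (Golay) pair, assumed for illustration.
CODE_A = [1, 1, 1, -1]
CODE_B = [1, 1, -1, 1]


def echo(code, amplitude, delay, length):
    """Baseband record holding one coded echo (Equation 1-8 with P = 1)."""
    rx = [0] * length
    for k, chip in enumerate(code):
        rx[delay + k] += amplitude * chip
    return rx


def compress(rx, code):
    """Cross-correlate the record with the known code (Equation 1-9B)."""
    out = []
    for n in range(len(rx)):
        acc = 0
        for k, chip in enumerate(code):
            if n + k < len(rx):
                acc += rx[n + k] * chip
        out.append(acc)
    return out


alpha = complex(0.5, -0.25)  # hypothetical complex echo amplitude
delay, length = 5, 16        # hypothetical delay (in samples) and record length

out_a = compress(echo(CODE_A, alpha, delay, length), CODE_A)
out_b = compress(echo(CODE_B, alpha, delay, length), CODE_B)
total = [a + b for a, b in zip(out_a, out_b)]

print(out_a[delay - 3:delay + 4])  # peak of M*alpha with spurious sidelobes
print(out_b[delay - 3:delay + 4])  # same peak, sidelobes of opposite sign
print(total[delay - 3:delay + 4])  # single peak of 2*M*alpha
```

Each individual output carries sidelobes of $+\alpha$ and $-\alpha$, but the sum contains a single sample of amplitude $2M\alpha$ whose phase is that of the echo.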

The amplitude coefficient M in Equation 1–9 is tremendously significant! It is what makes spread-spectrum techniques practical and useful. The M means that a signal received at a level of 1 µV would result in a compressed pulse of amplitude M µV, a gain of $20 \log_{10}(M)$ dB. Unfortunately, the benefits of all of that gain are not actually realized because the RMS amplitude of the random noise received with the signal (which is incoherently summed by Equation 1–9B) goes up by a factor of $\sqrt{M}$. However, this still represents a power gain (since power = amplitude²) equal to M, or $10 \log_{10}(M)$ dB. The $\sqrt{M}$ coefficient for the incoherent summation of multiple independent noise samples is developed more thoroughly in the following section on Coherent Spectral Integration, but the factor of M increase for the coherent summation of the signal is clearly illustrated in Figure 1-7.

The next concern is whether the pulse compression process is still valid when multiple signals are superimposed on each other, as occurs when multipath echoes are received. It seems likely that multiple overlapping signals would be resolved since Equation 1–9 and the free space propagation phenomenon are linear processes, so the output of the process for multiple inputs should be the same as the sum of the outputs for each input signal treated independently. This linearity property is illustrated in Figure 1-8. Two 4-chip input signals, one three times the amplitude of the other, are overlapped by two chips at the upper left of the illustration. After pulse compression, as seen in the lower right, the two resolved components still display a 3:1 amplitude ratio and are separated by two chip periods.
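The linearity argument can be checked with two overlapping echoes. This is a sketch only: the 4-chip pair, the delays (4 and 6 samples, so the echoes overlap by two chips) and the helper names are assumptions made for illustration.

```python
# One known 4-chip complementary pair, assumed for illustration.
PAIR = ([1, 1, 1, -1], [1, 1, -1, 1])


def received(code, paths, length=16):
    """Sum of coded echoes; paths is a list of (amplitude, delay) tuples."""
    rx = [0] * length
    for amplitude, delay in paths:
        for k, chip in enumerate(code):
            rx[delay + k] += amplitude * chip
    return rx


def correlate(rx, code):
    """Cross-correlation of the record with the code, zero beyond the record."""
    return [sum(rx[n + k] * chip for k, chip in enumerate(code) if n + k < len(rx))
            for n in range(len(rx))]


def compress_pair(paths):
    """Compress both coded pulses and add the two height profiles."""
    outputs = [correlate(received(code, paths), code) for code in PAIR]
    return [a + b for a, b in zip(*outputs)]


paths = [(3, 4), (1, 6)]  # 3:1 amplitude ratio, two chips apart
both = compress_pair(paths)
separate = [x + y for x, y in zip(compress_pair(paths[:1]), compress_pair(paths[1:]))]

print(both)
```

Compressing the superposed signal gives the same profile as compressing each echo alone and adding the results, with the two peaks two samples apart and in a 3:1 ratio.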

Figure 1-8 Resolution of Overlapping Complementary Coded Pulses

The phase of the received signal is detected by quadrature sampling; but how is the complex quantity $\alpha_i$, or $a_i \exp[-j\phi_i]$, related to the RF phase ($\phi_i$) of each individual multipath component? It can be shown that this phase represents the phase of the original RF signal components exactly. As shown in Equations 1–10 and 1–11, the down-converting (frequency translation) of r(t) by an oscillator, $\exp[-j2\pi f_0 t]$, results in:

$$r_1(t) = \sum_{i=1}^{P} a_i\, p(t - \tau_i) \exp[j2\pi f_0 t - j\phi_i] \exp[-j2\pi f_0 t] = \sum_{i=1}^{P} a_i\, p(t - \tau_i) \exp[-j\phi_i] \tag{1-10}$$

or

$$r_1(t) = \sum_{i=1}^{P} \alpha_i\, p(t - \tau_i) \quad \text{where } \alpha_i = a_i \exp[-j\phi_i] \text{ is a complex amplitude} \tag{1-11}$$

This signal maintains the parameter $\phi_i$, which is the original phase of each RF multipath component. Note that the oscillator is defined as having zero phase ($\exp[-j2\pi f_0 t]$).

Alternative Pulse Compression Codes

Due

to many possible mechanisms the pulse compression process will

have imperfections, which may cause energy reflected from any

given height to leak or spill into other heights to some degree.

This leakage is the result of channel induced Doppler, mathematical

imperfection of the phase code (except in the Complementary

Codes which are mathematically perfect) and/or imperfection

in the phase and amplitude response of the transmitter or receiver.

Several codes were simulated and analyzed for leakage from one

height to another and for tolerance to signal distortion caused

by band-limiting filters. All of the pulse compression algorithms

used are cross-correlations of the received signal with a replica

of the unit amplitude code known to have been sent. Therefore,

since Equation 1–9B represents a "cross-correlation"

(the unit amplitude function p(t) is cross-correlated with the

complex amplitude weighted version) of p(k) with itself,

it is the leakage properties of the autocorrelation functions

which are of interest.

The autocorrelation functions of several codes were computed on either a PC or a VAX computer and are shown in the following figures:

a. Complementary Series (Figure 1-9)

b. Periodic M-codes (Figure 1-10)

c. Non-periodic M-codes (Figure 1-11)

d. Barker Codes (Figure 1-12)

e. Kasami Sequence Codes (Figure 1-13)

Figure 1-9 Autocorrelation Function of the Complementary Series

Figure 1-10 Autocorrelation Function of a Periodic Maximal Length Sequence

Figure 1-11 Autocorrelation Function of a Non-Periodic Maximal Length Sequence

Figure 1-12 Autocorrelation Function of the Barker Code

Figure 1-13 Autocorrelation Function of the Kasami Sequence

Since

the Complementary Series pairs do not leak energy into

any other height bin this phase code scheme seemed optimum and

was chosen for the DPS’s vertical incidence measurement

mode in order to provide the maximum possible dynamic range

in the measurement. If there is too much leakage (for instance

at a –20 dB level) then stronger echoes would create a "leakage noise floor" in which weaker echoes could not be detected. The autocorrelation function of the Maximal

Length Sequence (M-code) is particularly good since for M =

127, the leakage level is over 40 dB lower than the correlation

peak and the correlation peak provides over 20 dB of SNR enhancement.

However, since these must be implemented as a continuous transmission

(100% duty cycle) they are not suitable for vertical incidence

monostatic sounding. Therefore the M-Code is the code of choice

for oblique incidence bi-static sounding, where the transmitter

need not be shut off to provide a listening interval.

The

M-codes which provide the basic structure of the oblique waveform,

all have a length of $M = 2^N - 1$. The attractive

property of the M-codes is their autocorrelation function, shown

in Figure 1-10. This type of function is often referred to as

a "thumbtack". As long as the code is repeated at

least a second time, the value of the cross correlation function

at lag values other than zero is –1 while the value at

zero is M. However, if the M-Code is not repeated a second time,

i.e., if it is a pulsed signal with zero amplitude before and

after the pulse, the correlation function looks more like Figure

1-11. The characteristics of Figure 1-11 also apply if the second

repetition is modulated in phase, frequency, amplitude, code number or time shift (i.e., starting chip). So to achieve the "clean"

correlation function with M-Codes (depicted in Figure 1-10),

the identical waveform must be cyclically repeated (i.e., periodic).
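The difference between the periodic and non-periodic correlation functions can be seen with a short simulation. The sketch below generates a 127-chip maximal length sequence from the recurrence s[n+7] = s[n+1] XOR s[n] (primitive polynomial x^7 + x + 1); this generator is an assumption made for illustration and is not necessarily the one used in the DPS.

```python
def m_sequence_127():
    """127-chip maximal length sequence from s[n+7] = s[n+1] XOR s[n]."""
    state = [1, 1, 1, 1, 1, 1, 1]           # any non-zero starting state works
    chips = []
    for _ in range(127):
        chips.append(1 - 2 * state[0])      # map bit 0 to +1 and bit 1 to -1
        state = state[1:] + [state[0] ^ state[1]]
    return chips


def periodic_autocorrelation(code):
    """Correlation when the code repeats cyclically (as in Figure 1-10)."""
    m = len(code)
    return [sum(code[n] * code[(n + k) % m] for n in range(m)) for k in range(m)]


def aperiodic_autocorrelation(code):
    """Correlation of a single pulse with silence before and after (Figure 1-11)."""
    m = len(code)
    return [sum(code[n] * code[n + k] for n in range(m - k)) for k in range(m)]


code = m_sequence_127()
periodic = periodic_autocorrelation(code)
aperiodic = aperiodic_autocorrelation(code)

print("periodic peak:", periodic[0], "other lags:", set(periodic[1:]))
print("largest single-pulse sidelobe:", max(abs(v) for v in aperiodic[1:]))
```

With cyclic repetition every non-zero lag equals –1 against a peak of 127, whereas the single-pulse case shows noticeably larger sidelobes.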

The problem that occurs with the M-codes is that if any of the multipath signal components starts or ends during the acquisition of one code record, then there are zero amplitude samples (for that multipath component) in the matched filter as the code is being pulse compressed. If this happens then the imperfect cancellation

of code amplitude (which is illustrated by Figure 1-11) at correlation

lag values other than zero will occur. In order to obtain the

thumbtack pulse compression, the matched filter must always

be filled with samples from either the last code repetition,

the current code repetition or the next code repetition (with

no significant change), since these sample values are necessary

to make the code compression work. "Priming" the channel

with 5 msec of signal before acquiring samples at the receiver

ensures that all of the multipath components will have preceding

samples to keep the matched filter loaded. Similarly after the

end of the last code repetition an extra code repetition makes

the synchronization less critical.

This

"priming" becomes costly however, for when it is desired

to switch frequencies, antennas, polarizations etc., the propagation

path(s) have to be primed again. The 75% duty cycle waveform

(X = 3) allows these multiplexed operations to occur, but as

a result, only 8.5 msec out of each 20 msec of measurement time

is spent actually sampling received signals. The 100% duty cycle

waveform (X = 4) does not allow multiplexed operation, except

that it will perform an O polarization coherent integration

time (CIT) immediately

after an X polarization CIT has been completed. Since the simultaneity

of the O/X multiplexed measurement is not so critical (the amplitude

of these two modes fade independently anyway), this is essentially

still a simultaneous measurement. Because the 100% mode performs

an entire CIT without changing any parameters, it can continuously

repeat the code sequence and therefore the channel need only

be primed before sampling the very first sample of each CIT.

After this, subsequent code repetitions are primed by the previous

repetition.

Even

though the Complementary Code pairs are theoretically perfect,

the physical realization of this signal may not be perfect.

The Complementary Code pairs achieve zero leakage by producing

two compressed pulses (one from each of the two codes) which

have the same absolute amplitude spurious correlation peaks

(or leakage) at each height, but all except the main correlation

peak are inverted in phase between the two codes. Therefore,

simply by adding the two pulse compression outputs, the leakage

components disappear. Since the technique relies on the phase

distance of the propagation path remaining constant between

the sequential transmission of the two coded pulses, the phase

change vs. time caused by any movement in the channel geometry

(i.e., Doppler shift imposed on the signal) can cause imperfect

cancellation of the two complex amplitude height profile records.

Therefore, the Complementary Code is particularly sensitive

to Doppler shifts since channel induced phase changes which

occur between pulses will cause the two pulse compressions

to cancel imperfectly, while with most other codes we are only

concerned with channel induced phase changes within the duration

of one pulse. However, if given the parameters of the propagation

environment, we can calculate the maximum probable Doppler shift,

and determine if this yields acceptable results for vertical

incidence sounding.

With

200 pps, the time interval between one pulse and the next is

5 msec. If one pulse is phase modulated with the first of the

Complementary Codes, while the next pulse has the second phase

code, the interval over which motions on the channel can cause

phase changes is only 5 msec. The degradation in leakage cancellation

is not significant (i.e., less than –15 dB) until the phase

has changed by about 10 degrees between the two pulses. The

Doppler induced phase shift is:

$$\Delta\phi = 2\pi T f_D \ \text{radians} \tag{1-12}$$

where $f_D$ is the Doppler shift in Hz and T is the time between pulses.

The Doppler shift can be calculated as:

$$f_D = \frac{f_0 v_r}{c} \quad \text{or, for a 2-way radar propagation path,} \quad f_D = \frac{2 f_0 v_r}{c} \tag{1-13}$$

where $f_0$ is the operating frequency and $v_r$ is the radial velocity of the reflecting surface toward or away from the sounder transceiver. The radial velocity is defined as the projection of the velocity of motion ($\mathbf{v}$) on the unit amplitude radial vector ($\mathbf{r}$) between the radar location and the moving object or surface, which in the ionosphere is an isodensity surface. This is the scalar product of the two vectors:

$$v_r = \mathbf{v} \cdot \mathbf{r} = |\mathbf{v}| \cos(\theta) \tag{1-14}$$

A

phase change of 10° in 5 msec

would require a Doppler shift of about 5.5 Hz, or 160 m/sec

radial velocity (roughly half the speed of sound), which seldom

occurs in the ionosphere except in the polar cap region. The

8-chip complementary phase code pulse compression and coherent

summation of the two echo profiles provides a 16-fold increase

in signal amplitude, and a 4-fold increase in noise amplitude

for a net signal processing gain of 12 dB. The 127-chip Maximal

Length Sequence provides a 127-fold increase in amplitude and

a net signal processing gain of 21 dB. The Doppler integration,

as described later can provide another 21 dB of SNR enhancement,

for a total signal processing gain of 42 dB, as shown by the

following discussion.
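The numbers in this paragraph can be reproduced from Equations 1-12 and 1-13. The sketch below is illustrative only: the 5 MHz operating frequency is an assumed value (the text does not state which frequency the 160 m/sec figure refers to), and the function names are hypothetical.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light


def doppler_for_phase_change(phase_deg, interval_s):
    """Doppler shift (Hz) giving a phase change over the interval (Equation 1-12)."""
    return math.radians(phase_deg) / (2.0 * math.pi * interval_s)


def radial_velocity(f_doppler_hz, f0_hz):
    """Radial velocity (m/sec) for a two-way path (Equation 1-13)."""
    return f_doppler_hz * C_M_PER_S / (2.0 * f0_hz)


def gain_db(signal_factor, noise_factor):
    """Net processing gain from the amplitude increases of signal and noise."""
    return 20.0 * math.log10(signal_factor / noise_factor)


f_d = doppler_for_phase_change(10.0, 5e-3)  # 10 degrees in 5 msec
v_r = radial_velocity(f_d, 5e6)             # assumed 5 MHz sounding frequency
gain_complementary = gain_db(16, 4)         # 8-chip pair: signal x16, noise x4
gain_m_code = gain_db(127, math.sqrt(127))  # 127-chip M-code

print(f"Doppler shift: {f_d:.2f} Hz, radial velocity: {v_r:.0f} m/sec")
print(f"gains: {gain_complementary:.1f} dB and {gain_m_code:.1f} dB")
```

Because the velocity scales inversely with operating frequency, the same 10° limit corresponds to a higher radial velocity at lower sounding frequencies.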

Coherent Doppler (Spectral or Fourier) Integration

The

pulse compression described above occurs with each pulse transmitted,

so the 12 to 21 dB SNR improvement (for 8-chip complementary phase codes or 127-chip M-codes respectively) is achieved without

even sending another pulse. However, if the measurement can

be repeated phase coherently, the multiple returns can be coherently

integrated to achieve an even more detectable or "cleaner"

signal. This process is essentially the same as averaging, but

since complex signals are used, signals of the same phase are

required if the summation is going to increase the signal amplitude.

If the phase changes by more than 90° during

the coherent integration then continued summation will start

to decrease the integrated amplitude rather than increase it.

However, if transmitted pulses are being reflected from a stationary

object at a fixed distance, and the frequency and phase of the

transmitted pulses remain the same, then the phase and amplitude

of the received echoes will stay the same indefinitely.

The coherent summation of N echo signals causes the signal amplitude to increase by N, while the incoherent summation of the noise amplitude in the signal results in an increase in the noise amplitude of only $\sqrt{N}$. Therefore with each N pulses integrated, the SNR increases by a factor of $\sqrt{N}$ in amplitude, which is a factor of N in power. This improvement is called signal processing gain and can be defined best in decibels (to avoid the confusion of whether it is an amplitude ratio or a power ratio) as:

$$\text{Processing Gain} = 20 \log_{10}\left\{\frac{S_p/Q_p}{S_i/Q_i}\right\} \tag{1-15}$$

where $S_i$ is the input signal amplitude, $Q_i$ the input noise amplitude, $S_p$ the processed signal amplitude, and $Q_p$ the processed noise amplitude. Q is chosen for the random variable to represent the noise amplitude, since N would be confusing in this discussion. This coherent summation is similar to the pulse compression processing described in the preceding section, where N, the number of pulses integrated, is replaced by M, the number of code chips integrated.
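Equation 1-15 can be illustrated with a small Monte Carlo experiment. This is a sketch under stated assumptions: real-valued Gaussian noise of unit RMS amplitude, unit-amplitude echoes that are perfectly in phase, and hypothetical function names and trial counts.

```python
import math
import random


def processing_gain_db(s_in, q_in, s_out, q_out):
    """Equation 1-15: gain from input and processed signal and noise amplitudes."""
    return 20.0 * math.log10((s_out / q_out) / (s_in / q_in))


def integrate(n_pulses, trials=2000, sigma=1.0, seed=1):
    """Sum n_pulses in-phase unit echoes and estimate the RMS of the summed noise."""
    rng = random.Random(seed)
    signal_sum = float(n_pulses)  # coherent: amplitudes add directly
    power = 0.0
    for _ in range(trials):
        noise_sum = sum(rng.gauss(0.0, sigma) for _ in range(n_pulses))
        power += noise_sum * noise_sum
    return signal_sum, math.sqrt(power / trials)


N = 128
s_p, q_p = integrate(N)
gain = processing_gain_db(1.0, 1.0, s_p, q_p)

print(f"noise RMS after summation: {q_p:.2f} (sqrt(N) = {math.sqrt(N):.2f})")
print(f"measured gain: {gain:.1f} dB, 10*log10(N) = {10 * math.log10(N):.1f} dB")
```

With N = 128 the expected gain is about 21 dB, which matches the figure quoted for Doppler integration in this chapter.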

Another

perspective on this process is achieved if the signal is normalized

during integration, as is often done in an FFT algorithm to

avoid numeric overflow. In this case Sp is nearly

equal to Si, but the noise amplitude has been averaged.

Thus by invoking the central limit theorem [Freund, 1967 or

any basic text on probability], we would expect that as long

as the input noise is a zero mean (i.e., no DC offset) Gaussian

process, the averaged RMS noise amplitude, $\sigma_{np}$ (p for processed), will approach zero as the integration progresses, such that after N repetitions:

$$\sigma_{np}^2 = \sigma_{ni}^2 / N \quad \text{(the variance represents power)} \tag{1-16}$$

Since the SNR can be improved by a variable factor of N, one might think that we could use arbitrarily weak transmitters for almost any remote sensing task and just continue integrating until the desired signal to noise ratio (SNR) is achieved. In practical applications the integration time limit occurs when the signal undergoes (or may undergo, in a statistical sense) a phase change of 90°. However, if the signal is changing phase linearly with time (i.e., has a frequency shift, $\Delta\omega$), the integration time may be extended by Doppler integration (also known as spectral integration, Fourier integration, or frequency domain integration). Since the Fourier transform applies the whole range of possible phase shifts needed to keep the phase of a frequency shifted signal constant, a coherent summation of successive samples is achieved even though the phase of the signal is changing. The unity amplitude phase shift factor, $e^{-j\omega t}$, in the Fourier Integral (shown as Equation 1–17) varies the phase of the signal r(t) as a function of time during integration. At the frequency ($\omega$) which stabilizes the phase of the component of r(t) with frequency $\omega$ over the interval of integration (i.e., makes $r(t)\,e^{-j\omega t}$ coherent) the value of the integral increases with time rather than averaging to zero, thus creating an amplitude peak in the Doppler spectrum at the Doppler line which corresponds to $\omega$:

$$F[r(t)] = R(\omega) = \int r(t)\, e^{-j\omega t}\, dt \tag{1-17}$$

Does

this imply that an arbitrarily small transmitter can be used

for any remote sensing application, since we can just integrate

long enough to clearly see the echo signal? To some extent this

is true. There is no violation of conservation of energy in

this concept since the measurement simply takes longer at a

lower power; however, in most real world applications, the medium

or environment will change or the reflecting surface will move

such that a discontinuous phase change will occur. Therefore

a system must be able to detect the received signal before a

significant movement (e.g., a quarter to a half of a wavelength)

has taken place. This limits the practical length of integration

that will be effective.

The

discrete time (sampled data) processing looks very similar (as

shown in Equation 1–18). For a signal with a constant frequency

offset (i.e., phase is changing linearly with time) the integration

time can be extended very significantly, by applying unity amplitude

complex coefficients before the coherent summation is performed.

This stabilizes the phase of a signal which would otherwise

drift constantly in phase in one direction or the other (a positive

or negative frequency shift), by adding or subtracting increasingly

larger phase angles from the signal as time progresses. Then

when the phase shifted complex signal vectors are added, they

will be in phase as long as that set of "stabilizing"

coefficients progress negatively in phase at the same rate as

the signal vector is progressing positively. The Fourier transform

coefficients serve this purpose since they are unity amplitude

complex exponentials (or phasors), whose only function is to

shift the phase of the signal, r(n), being analyzed.

Since the Digisonde™ sounders have always done this spectral integration digitally, the following presentation will cover only discrete time (sampled data rather than continuous signal notation) Fourier analysis.

$$F[r(t)] = R[k] = \sum_{n=0}^{N-1} r[n] \exp[-j2\pi nk/N] \tag{1-18}$$

where r[n] is the sampled data record of the received signal at one certain range bin, n is the pulse number upon which the sample r[n] was taken, T is the time period between pulses, N is the number of pulses integrated (number of samples r[n] taken), and k is the Doppler bin number or frequency index. Since a Doppler spectrum is computed for each range sampled, we can think of the Fourier transforms as $F_{56}[\omega]$ or $F_{192}[\omega]$, where the subscripts signify with which range bin the resulting Doppler spectra are associated.
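Equation 1-18 can be sketched as a direct DFT over the samples taken at one range bin. The example below is illustrative only: it assumes 64 pulses at 100 pps, a single echo whose Doppler shift falls exactly on Doppler line 5, and hypothetical names and amplitude values.

```python
import cmath
import math


def doppler_spectrum(samples):
    """Direct evaluation of Equation 1-18 for one range bin."""
    n_total = len(samples)
    return [sum(r * cmath.exp(-2j * math.pi * n * k / n_total)
                for n, r in enumerate(samples))
            for k in range(n_total)]


N = 64      # pulses integrated (assumed)
T = 0.01    # time between pulses in seconds, i.e. 100 pps (assumed)
k0 = 5
f_doppler = k0 / (N * T)                  # places the echo exactly on line k0
alpha = 0.5 * cmath.exp(1j * 0.3)         # hypothetical complex echo amplitude

# The echo phase advances linearly from pulse to pulse because of the Doppler shift.
samples = [alpha * cmath.exp(2j * math.pi * f_doppler * n * T) for n in range(N)]
spectrum = doppler_spectrum(samples)
peak = max(range(N), key=lambda k: abs(spectrum[k]))

print(f"Doppler resolution 1/NT = {1.0 / (N * T):.4f} Hz")
print(f"peak at line {peak}, amplitude {abs(spectrum[peak]):.1f}, "
      f"phase {cmath.phase(spectrum[peak]):.2f} rad")
```

The peak amplitude is N times the echo amplitude and its phase is the echo's original phase, so the coherent summation is achieved even though the phase rotates from pulse to pulse.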

By

processing every range bin first by pulse compression (12 to

21 dB of signal processing gain) then by coherent integration,

all echoes from each range have gained 21 to 42 dB of processing

gain (depending on the waveform used and the length of integration)

before any attempt is made to detect them.

NOTE

Further explanation of Equation 1–18, which can be gathered from any good reference on the Discrete Fourier Transform, such as [Oppenheim & Schafer, Prentice Hall, 1975], follows. The total integration time is NT, where T is the sampling period (in the DPS, the time period between transmitted pulses). The frequency spacing between Doppler lines, i.e., the Doppler resolution, is $2\pi/NT$ rad/sec (or 1/NT Hz) and the entire Doppler spectrum covers $2\pi/T$ rad/sec (with complex input samples this is $\pm\pi/T$, but with real input samples the positive and negative halves of the spectra are mirror image replicas of each other, so only $\pi/T$ rad/sec are represented).

What

is coherently integrated by the Fourier transformation in the

DPS (as in any pulse-Doppler radar) is the time sequence of

complex echo amplitudes received at the same range (or height)

that is, at the same time delay after each pulse is transmitted.

Figure 1-14 shows memory buffers with range or time delay vertically

and pulse number (typically 32 to 128 pulses are transmitted)

horizontally which hold the received samples as they are acquired

by the digitizer. After each pulse is transmitted, one column

is filled from the bottom up at regular sampling intervals,

as the echoes from progressively higher heights are received (33.3 µsec per 5 km). These columns of samples are referred to as

height profiles, which are not to be confused with electron

density profiles, but rather mirror the radar terminology of

a "slant range profile" (range becomes height for

vertical incidence sounding) which is simply the time record

of echoes resulting from a transmitted pulse. A height profile

is simply a column of numeric samples which may or may not represent

any reflected energy (i.e., they may contain only noise).

Figure 1-14 Eight Coherent Parallel Buffers for Simultaneous Integration of Spectra

Complex Windowing Function

With T (the sampling period between subsequent samples of the same coherent process, i.e., the same hardware parameters) defined by the measurement program, the first element of the Discrete Fourier Transform (i.e., the amplitude of the DC component) will have a spectral width of 1/NT. This spectral resolution may be so wide that all Doppler shifts received from the ionosphere fall into this one line. For instance, in the mid-latitudes it is very rare to see Doppler shifts of more than 3 Hz, yet with a ±50 Hz spectrum of 16 lines, the Doppler resolution is 6.25 Hz, so a 3 Hz Doppler shift would still appear to show "no movement". For sounding, it would be much more interesting if instead of a DC Doppler line, a +3.125 Hz and a –3.125 Hz line were produced, such that even very fine Doppler shifts would indicate whether the motion was up or down. The DC line is a seemingly unalterable characteristic of the FFT method of computing the Discrete Fourier Transform, yet with a true DFT algorithm the Fourier transform coefficients can be chosen such that the centre of the Doppler lines analyzed can be placed wherever the designer desires them to be. Since the DSP could no longer keep up with the real-time operation if the DFT algorithm were used, another solution had to be found. What was needed was a –½ Doppler line shift which would be correct for any value of N or T.

Because

the end samples in the sampled time domain function are random,

a tapering window had to be used to control the spurious response

of the Doppler spectrum to below –40 dB (to keep the SNR

high enough to not degrade the phase measurement beyond 1°).

Therefore a Hanning function, H(n), which is a real function,

was chosen and implemented early in the DPS development. The

reader is referred to [Oppenheim and Schafer, 1975] for the

definition and applications of the Hanning function. The solution

to achieving the ½ Doppler line shift was to make the Hanning

function amplitudes complex with a phase rotation of 180° during

the entire time domain sampling period NT. The new complex Hanning

weighting function is applied simply by performing complex rather

than real multiplications. This implements a single-sideband

frequency conversion of ½ Doppler line before the FFT is

performed. In the following equation, each received multipath signal has only one spectral component ($k = D_i$) such that it can be represented as $\alpha_i \exp[-j2\pi n D_i]$:

$$r(n) = \left\{\sum_{i=1}^{P} \alpha_i \exp[-j2\pi n D_i]\right\} |H(n)| \exp[-j2\pi(n/2NT)] = |H(n)| \sum_{i=1}^{P} \alpha_i \exp[-j2\pi(nD_i + n/2NT)] \tag{1-19}$$
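The effect of the complex Hanning weighting can be sketched as follows. This is not the DPS implementation: it assumes the common convention in which a positive Doppler shift is written as exp(+j2πft), uses a 16-line, ±50 Hz spectrum (T = 10 msec) as in the example above, and all function names are hypothetical.

```python
import cmath
import math


def hanning(n_total, half_line_shift):
    """Hann taper, optionally made complex with a 180 degree rotation over NT."""
    window = []
    for n in range(n_total):
        taper = 0.5 * (1.0 - math.cos(2.0 * math.pi * n / n_total))
        ramp = cmath.exp(-1j * math.pi * n / n_total) if half_line_shift else 1.0
        window.append(taper * ramp)
    return window


def dft(samples):
    """Plain discrete Fourier transform standing in for the FFT."""
    n_total = len(samples)
    return [sum(x * cmath.exp(-2j * math.pi * n * k / n_total)
                for n, x in enumerate(samples))
            for k in range(n_total)]


def peak_line(doppler_hz, half_line_shift, n_total=16, period_s=0.01):
    """Index of the strongest Doppler line for a single echo."""
    window = hanning(n_total, half_line_shift)
    echo = [cmath.exp(2j * math.pi * doppler_hz * n * period_s) for n in range(n_total)]
    spectrum = dft([w * e for w, e in zip(window, echo)])
    return max(range(n_total), key=lambda k: abs(spectrum[k]))


for shift in (False, True):
    print("complex window" if shift else "real window   ",
          "+3 Hz -> line", peak_line(+3.0, shift),
          "| -3 Hz -> line", peak_line(-3.0, shift))
```

With the real window both echoes peak in the DC line, while with the complex window the +3 Hz and –3 Hz echoes fall into different lines (line 15 is the first negative-frequency line), so the sign of a small Doppler shift becomes visible.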

Multiplexing

When

sending the next pulse, it need not be transmitted at the same

frequency, or received on the same antenna with the same polarization.

With the DPS it is possible to "go off" and measure

something else, then come back later and transmit the same frequency,

antenna and polarization combination and fill the second column

of the coherent integration buffer, as long as the data from

each coherent measurement is not intermingled (all samples

integrated together must be from the same coherent statistical

process). In this way, several coherent processes can be integrated

at the same time. Figure 1-14 shows eight coherent buffers,

independently collecting the samples for two different polarizations

and four antennas. This can be accomplished by transmitting

one pulse for each combination of antenna and polarization while

maintaining the same frequency setting (to also integrate a

second frequency would require eight more buffers), in which

case, each subsequent column in each array will be filled after

each eight pulses are transmitted and received. This

multiplexing continues until all of the buffers are filled with

the desired number of pulse echo records. The DPS can keep track of 64 separate buffers, and each buffer may contain up to 32,768 complex samples. The term "pulse" is used generically here. For Complementary Coded waveforms a pulse actually requires two pulses to be sent, and for 127-chip M-codes the pulse becomes a 100% duty cycle, or CW, waveform. However, in both cases, each pulse compression yields one synthesized complex-amplitude pulse, r2(n) in Equation 1-9, which is equivalent to a 67 µsec rectangular pulse and can be placed into the coherent buffer.
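The bookkeeping for this multiplexing can be sketched as follows. This is an illustration only, under stated assumptions: the buffers are modelled as nested Python lists indexed `buffer[range_bin][pulse_column]`, the polarization labels "O" and "X" are placeholders, and `fake_receive` is a hypothetical stand-in for the hardware that simply tags each sample with its pulse number.

```python
from itertools import product


def fill_buffers(antennas, polarizations, n_pulses, n_ranges, receive):
    """Fill one coherent buffer per (antenna, polarization) combination.

    Each buffer is indexed as buffer[range_bin][pulse_column].
    """
    combos = list(product(antennas, polarizations))
    buffers = {
        key: [[0j] * n_pulses for _ in range(n_ranges)] for key in combos
    }
    pulse_number = 0
    for column in range(n_pulses):
        # One pulse per combination; a column is complete after len(combos) pulses.
        for key in combos:
            record = receive(pulse_number, key, n_ranges)
            for range_bin, sample in enumerate(record):
                buffers[key][range_bin][column] = sample
            pulse_number += 1
    return buffers


def fake_receive(pulse_number, key, n_ranges):
    """Hypothetical stand-in for hardware: tags each sample with its pulse number."""
    return [complex(pulse_number, range_bin) for range_bin in range(n_ranges)]


buffers = fill_buffers([1, 2, 3, 4], ["O", "X"], n_pulses=4, n_ranges=3,
                       receive=fake_receive)
```

With four antennas and two polarizations there are eight buffers, and the second column of the first buffer is written by the ninth pulse (pulse number 8 when counting from zero), in line with the description above.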

The

full buffers now contain a record of the complex amplitude received

from each range sampled. Most of these ranges have no echo energy;

only externally generated manmade and natural noise or interference

from radio transmitters. If a particular ionospheric layer is

providing an echo, each height profile will have significant

amplitude at the height corresponding to that layer. By Fourier

transforming each row of the coherent buffer a Doppler

spectrum describing the radial velocity of that layer will be

produced. Notice that the sampling frequency at that layer

is less than or equal to the pulse repetition frequency (on

the order of 100 Hz).

After

the sequence of N pulses is processed, the pulse compression

and Doppler integration have resulted in a Doppler spectrum

stored in memory on the DSP card for each range bin, each antenna,

each polarization, and each frequency measured (maximum of 4 million simultaneously integrated samples). The program now scans through each spectrum and selects the single largest amplitude at each height. This amplitude is converted to a logarithmic magnitude

(dB units) and placed into a new one-dimensional array representing

a height profile containing only the maximum amplitude echoes.

This technique of selecting the maximum Doppler amplitude at

each height is called the modified maximum method, or MMM. If

the MMM height profile array is plotted for each frequency step

made, this results in an ionogram display, such as the one shown

in Figure 1-15.
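The row-by-row Doppler transform and the selection of the maximum line can be sketched as below. This is a simplified illustration, not the DSP code: it uses a plain DFT without windowing, the buffer is a list of rows (one row per range bin), and the `floor` argument that guards the logarithm is an assumption added here.

```python
import cmath
import math


def doppler_spectrum(row):
    """DFT of one range bin's pulse-to-pulse samples (one buffer row)."""
    n = len(row)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(row))
        for k in range(n)
    ]


def mmm_profile(coherent_buffer, floor=1e-12):
    """Return the largest Doppler-line amplitude per height, in dB."""
    profile_db = []
    for row in coherent_buffer:
        peak = max(abs(line) for line in doppler_spectrum(row))
        profile_db.append(20 * math.log10(max(peak, floor)))
    return profile_db


N_PULSES = 8
quiet = [0.01 + 0j] * N_PULSES  # range bin with no echo
echo = [10 * cmath.exp(2j * math.pi * 2 * i / N_PULSES) for i in range(N_PULSES)]
profile = mmm_profile([quiet, echo, quiet])
```

Here the middle range bin holds a coherent echo, so after integration over eight pulses it stands well above the two quiet bins in the resulting height profile.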

Figure

1-15 VI Ionogram Consisting of Amplitudes of Maximum Doppler

Lines

Angle of Arrival Measurement Techniques

Figure

1-16 Angle of Arrival Interferometry

The DPS system uses two distinct techniques for determining the angle of arrival of signals received on the four-antenna receiver array: an aperture resolution technique using digital beamforming (implemented as an on-site real-time capability) and a super-resolution technique which is applied in post-processing, when the measurement data is being analyzed. Both techniques utilize the basic principle of interferometry, which is illustrated

in Figure 1-16. This phenomenon is based on the free space path length difference between a distant source and each of some number of receiving antennas. The phase difference ($\Delta\phi$) between antennas is proportional to this free space path difference ($\Delta l$), based on the fraction of a wavelength represented by $\Delta l$.

$$\Delta l = d\sin\theta \quad\text{and}\quad \Delta\phi = \frac{2\pi\,\Delta l}{\lambda} = \frac{2\pi d\sin\theta}{\lambda} \tag{1-20}$$

where $\theta$ is the zenith angle, $d$ is the separation between antennas in the direction of the incident signal (i.e., in the same plane as $\theta$ is measured), and $\lambda$ is the free space wavelength of the RF signal. This relationship is used to compute the phase shifts required to coherently combine the four antennas for signals arriving in a given beam direction, and this relationship (solved for $\theta$) is also the basis of determining angle of arrival directly from the independent phase measurements made on each antenna.
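As a worked illustration of Equation 1-20, the sketch below computes the phase difference for a given zenith angle and then inverts the relationship. The function names, the 5 MHz frequency and the 30 m separation are example values chosen here. The inversion assumes the measured phase has already been unwrapped, since a phase measurement is only known modulo 2π.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def phase_difference(separation_m, zenith_deg, wavelength_m):
    """Phase difference in radians between two antennas (Equation 1-20)."""
    path = separation_m * math.sin(math.radians(zenith_deg))
    return 2 * math.pi * path / wavelength_m


def zenith_from_phase(separation_m, delta_phi, wavelength_m):
    """Equation 1-20 solved for the zenith angle, in degrees."""
    sine = delta_phi * wavelength_m / (2 * math.pi * separation_m)
    return math.degrees(math.asin(sine))


wavelength = SPEED_OF_LIGHT / 5e6  # about 60 m at 5 MHz
delta_phi = phase_difference(30.0, 10.0, wavelength)
recovered = zenith_from_phase(30.0, delta_phi, wavelength)
```

For these values the phase difference is roughly 0.55 rad, and solving for the zenith angle returns the original 10°.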

Figure

1-17 shows the physical layout of the four receiving antennas.

The various separation distances of 17.3, 34.6, 30 and 60 m are repeated in six different azimuthal planes (i.e., there is six-way symmetry in this array) and therefore the $\Delta\phi$ values computed for one direction also apply to five other directions. This

six-way symmetry is exploited by defining the six azimuthal

beam directions along the six axes of symmetry of the array,

making the beamforming computations very efficient. Section

3 of this manual contains detailed information for the installation

of receive antenna arrays.

Figure

1-17 Antenna Layout for 4-Element Receiver Antenna Array

Digital Beamforming

At

the end of the previous section it was shown that after completing

a multiplexed coherent integration there is an entire Doppler

spectrum stored for each height, each antenna, each frequency

and each polarization measured. All of these Doppler lines are

available to the beamforming algorithm. In addition, the DSP

software stores the complex amplitudes of the maximum Doppler line at each height (i.e., the height profile in MMM format, an array of 128 or 256 heights) separately for each antenna.

By setting a threshold (typically 6 dB above the noise floor),

the heights containing significant echo amplitude can quickly

be determined. These are the heights for which beam amplitudes

will be computed and a beam direction (the beam which creates

the largest amplitude at that height) declared. Due to spatial

decorrelation (an interference pattern across the ground) of

the signals received at the four antennas, it is possible that

the peak amplitude in each of the four Doppler spectra will

not appear in the same Doppler line. Therefore, to ensure that

the same Doppler line is used for each antenna (using different

Doppler lines would negate the significance of any phase difference

seen between antennas) only Antenna #1’s spectra are used

to determine which Doppler line position will be used for beamforming

at each height processed.
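The selection step described above can be sketched as follows. This is a hedged illustration rather than the DSP software: the data layout `spectra[antenna][height]`, the function name and the way the noise floor is supplied are all assumptions made for the example.

```python
import math


def select_doppler_lines(spectra, noise_floor_db, threshold_db=6.0):
    """spectra[antenna][height] is a list of complex Doppler lines.

    Index 0 is Antenna #1. Returns {height: (line, [one sample per antenna])}.
    """
    selected = {}
    for height, reference in enumerate(spectra[0]):
        # The Doppler line is chosen from Antenna #1 only.
        line = max(range(len(reference)), key=lambda k: abs(reference[k]))
        level_db = 20 * math.log10(max(abs(reference[line]), 1e-12))
        if level_db >= noise_floor_db + threshold_db:
            # Take the same line from every antenna so the phases are comparable.
            selected[height] = (line, [antenna[height][line] for antenna in spectra])
    return selected


noise = [1 + 0j, 1 + 0j, 1 + 0j, 1 + 0j]
spectra = [
    [noise, [1, 2, 10, 3]],  # Antenna #1: peak in line 2
    [noise, [1, 2, 8, 9]],   # Antenna #2: its own peak is in line 3
    [noise, [1, 2, 9, 3]],
    [noise, [1, 2, 7, 3]],
]
selected = select_doppler_lines(spectra, noise_floor_db=0.0)
```

In this example only the second height exceeds the threshold, and line 2 is used for all four antennas even though the second antenna's own maximum lies in line 3.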

At

each height where an echo is strong enough to be detected, the

four complex amplitudes are passed to a C function (beam_form)

where seven beams are formed by phase shifting the four complex

samples to compensate for the additional path length in the

direction of each selected beam. If a signal has actually arrived

from near the centre of one of the beams formed, then after

the phase shifting, all four signals can be summed coherently,

since they now have nearly the same phase, so that the beam

amplitude of the sum is roughly four times each individual amplitude.

The farther the true signal direction is from a given beam centre, the farther the phases of the four signals drift apart and the smaller the summed amplitude. However, in the DPS system

the beams are so wide that even at the higher frequencies the

signal azimuth may deviate more than 30° from the beam centres

and the four amplitudes will still sum constructively [Murali,

1993].
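The phase-shift-and-sum operation can be illustrated with the sketch below. It is written in Python for brevity and is not the `beam_form` C function itself. The antenna coordinates (a centre antenna plus the three vertices of a 60 m equilateral triangle) are an assumption inferred from the separation distances quoted earlier, and the beam azimuths of 0°, 60°, ... 300° with a 30° zenith angle are likewise assumed for the example.

```python
import cmath
import math

# Assumed layout: centre antenna plus three vertices of a 60 m equilateral triangle.
RADIUS = 60.0 / math.sqrt(3)  # about 34.6 m from centre to vertex
ANTENNAS = [(0.0, 0.0)] + [
    (RADIUS * math.cos(math.radians(a)), RADIUS * math.sin(math.radians(a)))
    for a in (90, 210, 330)
]
# Assumed beams: one vertical plus six oblique beams (zenith 30 deg, 60 deg apart).
BEAMS = [(0.0, 0.0)] + [(30.0, float(az)) for az in range(0, 360, 60)]


def steering_phase(position, zenith_deg, azimuth_deg, wavelength):
    """Phase of the extra path length to one antenna for a given direction."""
    x, y = position
    az = math.radians(azimuth_deg)
    path = math.sin(math.radians(zenith_deg)) * (x * math.cos(az) + y * math.sin(az))
    return 2 * math.pi * path / wavelength


def form_beams(samples, wavelength):
    """Return the summed amplitude of the four samples for each of the seven beams."""
    amplitudes = []
    for zenith, azimuth in BEAMS:
        total = sum(
            s * cmath.exp(-1j * steering_phase(p, zenith, azimuth, wavelength))
            for s, p in zip(samples, ANTENNAS)
        )
        amplitudes.append(abs(total))
    return amplitudes


wavelength = 299_792_458.0 / 5e6  # 5 MHz
# Simulated unit-amplitude echo arriving from zenith 30 deg, azimuth 120 deg.
arriving = [cmath.exp(1j * steering_phase(p, 30.0, 120.0, wavelength)) for p in ANTENNAS]
amplitudes = form_beams(arriving, wavelength)
strongest = max(range(len(BEAMS)), key=lambda b: amplitudes[b])
```

Because the simulated echo arrives exactly at the centre of one beam, the four phase-corrected samples add to four times the individual amplitude in that beam and to less in every other beam.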

The

technique for finding the angle of arrival is then simply to

compare the amplitude of the signal on each beam and declare

the direction as the beam centre of the strongest beam. Therefore

the accuracy of this technique is limited to 30° in azimuth

and 15° in elevation angle (the six azimuth beams are separated

by 60° and the oblique beams are normally set 30° away from

the vertical beam), as opposed to the Drift angle of arrival technique described in the next section, which obtains accuracies approaching 1°. There may be some question about the amplitude

of the sidelobes of these beams, but it is really immaterial

(computation of the array pattern for 10 MHz is shown in [Murali,

1993]). The fundamental principle of this technique is that

there is no direction which can create a larger amplitude

in a given beam than the direction of the centre of that beam.

Therefore, detecting the direction by selecting the beam with